A practical guide for business leaders navigating multiple AI tools across departments, rising costs, and growing security risk

Key Highlights

- AI tool sprawl occurs when multiple, disconnected AI tools spread across an organization without a clear AI strategy, creating chaos.

- This sprawl leads to significant hidden costs, fragmented workflows, and productivity losses for your teams.

- Unmanaged AI tools introduce serious security risks, including data breaches and compliance failures due to poor data management.

- Shadow AI, or the unapproved use of AI tools, worsens the problem by creating governance blind spots.

- You can regain control through a unified AI strategy, regular audits, and AI orchestration.

The Real Cost of AI Tool Sprawl (And How to Fix It)

If your organization has been experimenting with AI over the past year, you’ve probably noticed something: what started as a few team members trying ChatGPT has turned into a patchwork of subscriptions, tools, and workarounds across departments. What most organizations really need is access to multiple AI models in a single dashboard with centralized oversight.

You’re not alone. And it’s probably worth addressing sooner rather than later.

When we talk to IT leaders, operations managers, and department heads, we hear the same story again and again. The enthusiasm for AI is real. The results (when they happen) are genuinely impressive. But the infrastructure underneath? That’s where things get complicated.

Let’s talk about what’s actually happening in organizations right now, why it matters, and what a better path forward might look like.

The Pattern We’re Seeing (And Why It Might Be Costing You More Than You Think)

Here’s how it typically unfolds:

Someone in marketing signs up for an AI writing tool. Customer service starts using a chatbot platform. Finance tries a data analysis tool. Before long, you’ve got six different AI subscriptions, limited visibility into how they’re being used, and no consistent approach to security or compliance.

That’s AI tool sprawl: the uncontrolled growth of different AI tools and platforms across your company. It usually isn’t one big decision—it’s the accumulation of lots of small, reasonable choices made by teams eager to move fast in a fast-moving vendor ecosystem. Each tool made sense in isolation. But together? They create challenges.

The Budget Problem

You’re likely paying for redundant capabilities across multiple platforms. One team is paying $30/seat for AI writing. Another is paying $25/seat for a different AI tool that does largely the same thing. No single decision caused this, but multiply it across departments, and suddenly your “experimental” AI investment is a line item that needs justification.

And it’s not just seats. Sprawl often means duplicate contracts, overlapping usage-based fees, and scattered renewals—without a clear view of which tools are actually delivering ROI.
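To make the overlap concrete, here is a minimal Python sketch of the arithmetic. The $30 and $25 seat prices come from the example above; the team names, seat counts, the Finance row, and the assumption that prices are billed monthly are all hypothetical placeholders for your own numbers.

```python
from collections import defaultdict

# Hypothetical inventory. Seat prices for "writing" come from the example above;
# team names, seat counts, the Finance row, and monthly billing are assumptions.
subscriptions = [
    {"team": "Marketing", "capability": "writing", "price_per_seat": 30, "seats": 12},
    {"team": "Sales", "capability": "writing", "price_per_seat": 25, "seats": 20},
    {"team": "Finance", "capability": "analysis", "price_per_seat": 40, "seats": 5},
]


def overlapping_spend(subs):
    """Return total monthly spend for each capability bought more than once."""
    by_capability = defaultdict(list)
    for sub in subs:
        by_capability[sub["capability"]].append(sub)
    return {
        capability: sum(s["price_per_seat"] * s["seats"] for s in group)
        for capability, group in by_capability.items()
        if len(group) > 1  # only capabilities with duplicate subscriptions
    }


overlap = overlapping_spend(subscriptions)
print(overlap)
```

With these made-up seat counts, the two writing tools together account for $860 a month. That figure is a starting point for a consolidation conversation, not a savings estimate.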

The Security Problem

Every new tool becomes another surface area to secure, another vendor to vet, and another set of data-handling policies to review. Instead of one controlled environment, you end up with scattered API keys, disconnected vendor dashboards, and inconsistent safety controls.

That creates real risk: more places where sensitive data might be pasted, more integrations to monitor, and more chances for misconfigured access or unclear retention policies as the number of tools increases. Your security team may be playing whack-a-mole and struggling to keep up.

The Governance Problem

It’s difficult to create company-wide AI policies when every department uses different tools with varying capabilities, terms of service, and levels of compliance. Without a central platform team or baseline governance, each department improvises its own toolchain to move quickly.

So policies either don’t get created—or they become harder to enforce consistently because the controls (and the data paths) are fragmented.

The Productivity Problem

When people switch between tools to get work done (one AI for writing, another for analysis, a third for code), the cognitive load adds up. Context switching can kill momentum.

And because everything is disconnected, you lose shared workflows: prompts, standards, best practices, and learnings don’t travel easily across teams—so you pay the “reinvent the wheel” tax over and over.

This isn’t a failure of your teams. It’s a natural consequence of rapid innovation meeting organizational structure. But it’s worth addressing thoughtfully.

What Businesses Actually Need (Or at Least, What We’ve Seen Work)

After working with organizations across industries, from regulated environments like healthcare and local government to fast-moving manufacturing companies, we’ve identified best practices that tend to matter most when it comes to sustainable AI adoption:

1. AI Consolidation Without Compromise

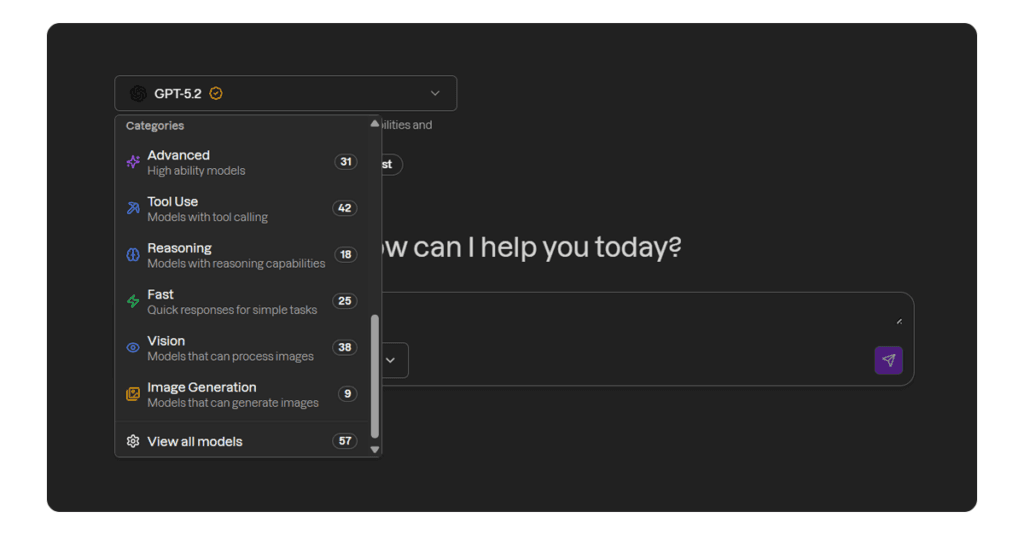

In our experience, organizations benefit from access to multiple AI models (because different models are legitimately better at different tasks) without having to manage multiple platforms. The approach that tends to work gives teams the flexibility to choose the best model for each job while giving leadership centralized oversight and sparing them the time spent maintaining separate platforms.

The key is making that access simple: multiple AI models in one dashboard, so teams can switch between AI models based on the task—without bouncing between apps or losing governance.

2. AI Security That’s Built In, Not Bolted On

Security works best when it’s not an afterthought, particularly when you need consistent security policies across the organization. When AI is part of your daily workflow (handling sensitive information like customer data, internal documents, strategic planning), it helps to know that every interaction meets the same rigorous standards. Not ‘we’ll figure out security later.’ Not ‘just use it in low-risk situations.’ Every interaction. Every time.

3. AI Governance That Actually Works

Good governance isn’t about creating barriers– it’s about creating clarity and establishing the right structure. Who can access what? How is AI being used across the organization? What happens when someone needs help or hits a limitation? If these questions are difficult to answer, governance becomes challenging to maintain.

4. Room to Grow Without Starting Over

Your AI needs in six months will likely differ from what they are today. Platforms that grow with you (adding users, expanding permissions, integrating new capabilities) tend to work better than solutions that require a complete overhaul every time your strategy evolves or usage scales. That’s especially true when your teams can switch between AI models as needs change, without re-platforming every time a different model fits the work better.

5. AI Customization That Reflects Your Business

Generative AI is powerful, but AI that understands your business can be transformative. The ability to train models on your specific policies, processes, and brand voice can mean responses that actually sound like they came from your organization– not from a generic chatbot.

6. Support That Understands Context

When something breaks, when a team needs training, or when you’re trying to figure out the best way to roll out a new use case in this AI era, it helps to have support from people who understand both the technology and the business implications. “Check the documentation” may not be enough when you’re making decisions that affect your entire organization.

A Different Approach: Multi-Model AI Platforms

This is why we provide AtNetHub.ai: not because we think AI needs another platform, but because we kept hearing the same pain points from businesses trying to adopt AI responsibly. They were looking for a way to give their teams access to the best AI capabilities available while maintaining the control, security, and visibility their organizations required.

Centralization doesn’t have to mean limiting choice– it can mean standardizing on an AI platform with multiple models that your whole organization can use safely.

What Does A Multi-Model AI Platform Look Like?

One platform. 57+ models. Your team gets access to ChatGPT, Claude, and dozens of other leading AI models from a single interface. Need a model that’s great at code? It’s there. Need one that excels at analysis? Also there. Switch between them based on the task, not based on which subscription you happen to have.

One bill. Potential savings. Instead of managing multiple AI subscriptions across departments, you can consolidate them into a single platform. Simpler procurement. Clearer budgeting. And because you’re not paying for redundant capabilities, many organizations find they reduce overall AI spend while actually expanding what their teams can do.

Security that’s already handled. AtNetHub.ai operates under the same security and compliance framework that governs all AtNetPlus services. Your data stays protected. Your policies stay enforced. Whether you’re in a regulated industry or just take security seriously, you can deploy AI knowing the foundation is solid.

Central management. Department-level flexibility. From one dashboard, you can add users, adjust permissions, track usage, and monitor activity across your organization. But departments still have the flexibility to use AI in ways that make sense for their work– you’re providing structure, not micromanaging.

AI that knows your business. You can train models on your internal documentation, policies, and processes. That means responses that align with how your organization actually operates– consistent, accurate, on-brand. Not generic. Not close enough. Right.

Real support from real people. We provide deployment assistance, training sessions, troubleshooting, and strategic guidance. Because we know that rolling out AI successfully isn’t just about the technology– it’s about helping your people use it effectively.

What This Could Mean for Your Organization

Option one: More tools, more subscriptions, more complexity across a growing list of SaaS vendors. You keep adding capabilities, but you also keep adding overhead. Your AI spend increases. Your security team gets more stressed. Your policies struggle to keep up. And eventually, someone asks the hard question: “Are we actually getting value from all this?”

Option two: You consolidate, standardize, and scale. You give your teams the flexibility they need within a framework that actually works. You potentially reduce costs while expanding capabilities. You deploy AI with confidence because security and compliance are built in, not afterthoughts. You can actually answer questions about how AI is being used across your organization.

The choice might seem obvious. But making it happen requires the right infrastructure.

Where to Start (If This Resonates)

If you’re reading this and thinking, “This sounds like what we need,” here’s what we’d suggest:

First, consider auditing what you have. Take stock of all the AI tools currently in use across your organization. Not just the official ones– the shadow AI ones too. What’s being used? Who’s paying for it? What’s it being used for? It’s easier to create a strategy when you know your starting point.
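An audit like this can start as a very simple inventory. The Python sketch below is one way to capture it, under stated assumptions: the tool names, departments, and fields are hypothetical, and the `approved` flag stands in for whatever sign-off process your IT team uses.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ToolRecord:
    name: str              # hypothetical tool name
    department: str        # who is using it
    payer: Optional[str]   # who is paying for it; None if nobody knows yet
    use_case: str          # what it is being used for
    approved: bool         # False means IT has not signed off on it


# Made-up example entries; replace with what your audit actually finds.
inventory = [
    ToolRecord("WriterTool A", "Marketing", "Marketing budget", "copy drafts", True),
    ToolRecord("ChatTool B", "Customer Service", None, "reply suggestions", False),
    ToolRecord("AnalysisTool C", "Finance", "Personal card", "spreadsheet analysis", False),
]


def audit_summary(records):
    """Summarize the inventory: tool count, unapproved tools, unknown payers."""
    return {
        "total": len(records),
        "unapproved": [r.name for r in records if not r.approved],
        "unknown_payer": [r.name for r in records if r.payer is None],
    }


summary = audit_summary(inventory)
print(summary)
```

A spreadsheet does the same job. What matters is that every tool gets an answer to the three questions above: what is being used, who pays for it, and what it is used for.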

Second, identify your non-negotiables. What security standards must be met? What compliance requirements apply? What level of visibility would be helpful for AI usage? These can become your baseline requirements for any platform you consider.
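One way to keep those baseline requirements honest is to write them down as a checklist and compare each candidate platform against it. The sketch below assumes a hypothetical set of requirement names (`sso`, `audit_logs`, `data_retention_controls`) and a made-up candidate; substitute your own non-negotiables.

```python
# Hypothetical baseline requirements; replace with your own non-negotiables.
REQUIRED = {"sso", "audit_logs", "data_retention_controls"}


def missing_requirements(platform_features, required=REQUIRED):
    """Return the required features a candidate platform lacks, sorted by name."""
    return sorted(required - set(platform_features))


# A made-up candidate platform and the features it claims to offer.
candidate = {"sso", "usage_dashboard"}
gaps = missing_requirements(candidate)
print(gaps)
```

An empty list means the candidate clears your baseline. Anything else is a gap to raise with the vendor before going further.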

Third, consider talking to the people who’ll actually use it. It often helps to avoid making AI decisions in a vacuum. Talk to department heads, team leaders, and the people on the ground who’ll use these tools daily. Understand their needs, their concerns, and their current workarounds.

Finally, look for a platform that meets both business and user needs. That’s the balance that tends to matter most– giving your organization the control and oversight it requires while giving your people the tools and flexibility they need to do their best work.

The Bottom Line

AI adoption doesn’t have to mean chaos. It doesn’t have to mean sprawling costs, security headaches, or governance nightmares.

With the right approach (centralized management, broad capability, built-in security, and real support), you can build an AI ecosystem that helps your organization move fast, work smart, and stay in control.

That’s what AtNetHub.ai is designed to do. Not just provide access to AI, but provide the structure and support that can make AI adoption sustainable, secure, and genuinely valuable for your business.

Curious to explore what centralized AI management could look like for your organization? Reach out to our team to discuss your specific needs, challenges, and goals. No hard sell– just a practical discussion about whether this approach makes sense for where you are and where you’re heading.

Frequently Asked Questions

How can small businesses address AI tool sprawl effectively?

Small businesses can combat AI tool sprawl by centralizing their AI tools through an AI orchestration platform. Start by auditing current tools, establishing clear best practices for adoption, and choosing a unified platform that offers visibility and control, even with limited IT resources.

What are the first signs of unmanaged shadow AI in a company?

The first signs of shadow AI include expense reports with unrecognized software subscriptions, irregular usage patterns in network logs, and employees mentioning tools unfamiliar to IT. These are key indicators that your internal landscape is becoming fragmented and could be at risk of data leakage.

Is technical consolidation the best way to eliminate costly AI tool sprawl?

Technical consolidation is a powerful step, but it’s most effective as part of a broader AI strategy. Combining it with a unified access layer and an AI orchestration platform provides the governance and visibility needed to not just eliminate current AI sprawl but also prevent it from recurring.

What causes AI tool sprawl in organizations?

AI tool sprawl is caused by decentralized decision-making, where teams independently adopt new tools for specific use cases. This is fueled by fast-moving vendor ecosystems and a lack of central governance from platform teams, leading to a fragmented and overlapping collection of AI solutions.

What risks or challenges can result from unmanaged AI tool sprawl?

Unmanaged AI sprawl introduces major security risks, including data breaches and compliance failures. It also leads to financial waste, productivity losses due to fragmented workflows, and inconsistent behavior across applications, undermining your governance efforts and creating operational chaos.

What is the difference between AI tool sprawl and shadow AI?

AI sprawl is the broad, uncontrolled proliferation of many AI tools, both approved and unapproved. Shadow AI is a subset of sprawl, specifically referring to tools used without IT’s knowledge or approval, creating major governance gaps as they operate outside established safety policies and rules.

What is shadow AI, and how is it related to AI sprawl?

Shadow AI is the use of AI tools by employees without official approval from IT teams. It is a direct contributor to AI sprawl, expanding the unmanaged internal landscape and increasing the risk of sensitive information being exposed through unsecured, unvetted applications.

What role does governance play in preventing AI tool sprawl?

Governance plays a critical role in preventing AI sprawl by establishing clear rules for AI adoption and usage. Through regular audits, consistent safety policies, and centralized oversight from IT teams, governance helps ensure that all tools are secure, compliant, and aligned with business objectives from the start.